The Most Important Thing: A Moral Code for AI


Just two months after its release, ChatGPT became the fastest product in history to reach 100 million users (TikTok took about 9 months; Instagram took around 2½ years).[1]

The experience of using ChatGPT is truly magical. It feels like having a real conversation with another person, one who has a wealth of knowledge and information at their fingertips. Researchers have described it as having the reasoning of a 10-year-old, albeit one with the whole internet downloaded into her brain.

And this has predictably set off an arms race, one in which the US is ahead but China is not far behind.

But the most important question in AI today is not who wins the arms race to build better foundation models. It is whether we can instill a moral code in AI before we let the genie out of the bottle.

LLMs (large language models) such as ChatGPT are built on the transformer, a deep-learning architecture; the "GPT" in ChatGPT stands for Generative Pre-trained Transformer. They are pretrained on huge text datasets largely without human supervision, learning by repeatedly predicting the next word in a passage (ChatGPT was then fine-tuned with human feedback). But LLMs don't actually engage in conversation the way we might think. Instead, they draw on that extensive training to generate sentences. Using statistical and probabilistic techniques, these models predict and select words one at a time, eventually forming sentences they never "planned" to create.
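To make the word-by-word process concrete, here is a minimal sketch in Python. It assumes a tiny, hypothetical hand-written table of next-word probabilities (`NEXT_WORD_PROBS`) standing in for a trained model, and it conditions only on the previous word. A real LLM computes these probabilities with a transformer over tokens and conditions on the entire preceding text. The point it illustrates holds either way: each word is chosen because it is probable, not because the sentence is true.

```python
import random

# Hypothetical toy "model": for each word, the probability of each possible next word.
# A real LLM computes such a distribution with a neural network over the whole context.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.5},
    "a": {"cat": 0.3, "dog": 0.7},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"sat": 0.4, "ran": 0.6},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate(seed=None, max_words=10):
    """Build a sentence one word at a time by sampling each next word
    from the probabilities associated with the previous word."""
    rng = random.Random(seed)
    word, sentence = "<start>", []
    for _ in range(max_words):
        options = NEXT_WORD_PROBS[word]
        # Pick the next word in proportion to its probability; nothing checks truth.
        word = rng.choices(list(options), weights=list(options.values()), k=1)[0]
        if word == "<end>":
            break
        sentence.append(word)
    return " ".join(sentence)


print(generate(seed=42))
```

Different seeds yield different sentences, and the program never "decides" in advance what the whole sentence will say; it only ever picks the next word.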

This process continues, producing more sentences that convey some type of information, regardless of its accuracy or truthfulness. LLMs don't care if the content they generate is true, accurate, or real, and they don't conceptually understand it. However, they excel at assembling language to express ideas they don't comprehend. And things could go horribly wrong.

Chatbots such as ChatGPT do not “know” what they are saying. They have no “prethought” guiding what they write. They rely on probability models, not whole-answer concepts, to come up with the next word to complete a sentence (but not to complete a thought), and all of it depends on the data from which they draw the pieces of language they compile into their “knowledge.” The key point is that LLMs are indifferent as to whether what they produce is accurate, reliable or real. When they get it wrong, they are, as techies like to say, merely “hallucinating,” and they will even fabricate whole studies to support their erroneous conclusions. (New York Times, 2/20/23)

This is a repeat of big tech’s (and venture capitalists’) past errors: favoring growth and speed over safety. Even the cofounder and chief executive of DeepMind, whose AI chatbot competes with ChatGPT, said: “When it comes to very powerful technologies – and obviously AI is going to be one of the most powerful ever – we need to be careful. Not everybody is thinking about those [risks]. It’s like experimentalists, many of whom don’t realize they’re holding dangerous material.” (Time, 2/27/23)

Even after these admissions of errors and warnings of risk became public, some magazines, such as Sports Illustrated and Men’s Journal, decided to use the technology anyway to “write” articles for publication. Men’s Journal’s first such article, “What All Men Should Know About Low Testosterone,” was reviewed by the chief of medicine at the University of Washington Medical Center and found to contain at least 18 persistent factual mistakes as well as mischaracterizations of medical science. (Futurism, 2/9/23)

The Code of Hammurabi is one of the oldest known legal codes in human history. It was introduced around 1754 BCE by Babylonian King Hammurabi, who ruled ancient Mesopotamia (present-day Iraq) from 1792 to 1750 BCE. The code consists of 282 laws inscribed on a stone stele.

Law 229 of Hammurabi's code states that if a builder builds a house that collapses and kills its owner, the builder shall be put to death. Nassim Taleb calls it “the best risk-management rule ever.” The law was designed to ensure that builders took their work seriously and built safe structures: knowing their own lives were on the line, they would be more careful.

The Hippocratic Oath is one of the oldest binding documents in history and is still held sacred by physicians.

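While we're all familiar with "primum non nocere," or "first, do no harm," that phrase does not actually appear in the Oath. Its closest source is another work attributed to Hippocrates, 'Of the Epidemics', which advises physicians "to help, or at least to do no harm."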

Isaac Asimov laid out the Three Laws of Robotics as far back as 1942, in his short story "Runaround," later collected in I, Robot.

The First Law is just as applicable to AI:

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

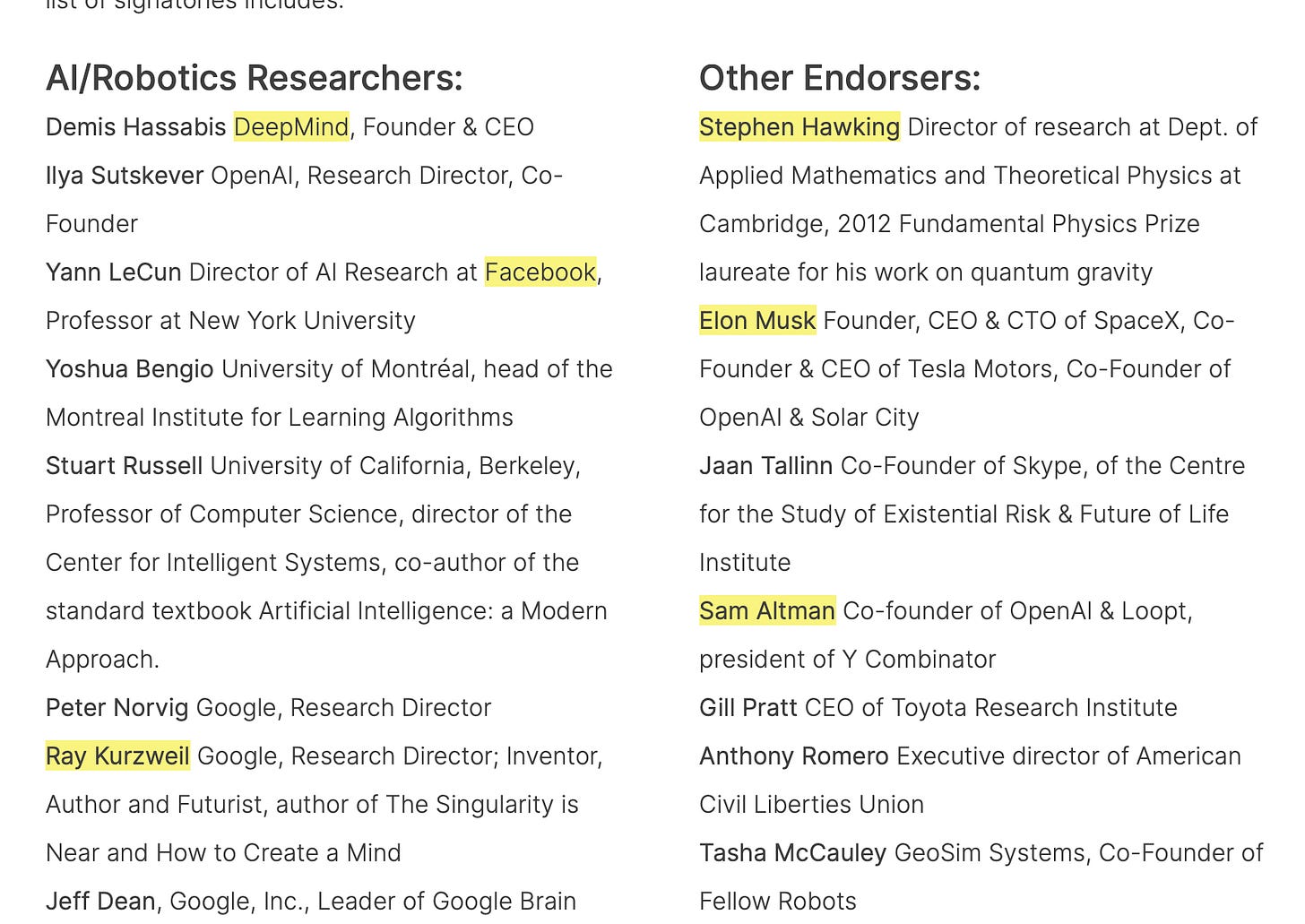

We’ve been following moral codes since the dawn of civilization, and there’s no reason we shouldn’t follow one for AI. The 2017 Asilomar AI Principles are endorsed by a who's who of AI, including Sam Altman of OpenAI.

Every company developing a foundation model, even if only for internal use to unlock value inside the enterprise, should be asked to publicly sign the 2017 Asilomar AI Principles pledge at https://futureoflife.org/open-letter/ai-principles/

PS: The Pashanomics AI Realist Manifesto is a worthwhile read on a moral code for AI.

[1] Reuters, 2/1/23: https://www.reuters.com/technology/chatgpt-sets-record-fastest-growing-user-base-analyst-note-2023-02-01/